@Christian_Voelker I don’t see anything relevant in the Samba logs.

As for the strace, this is what I get:

fcntl(38, F_SETFD, FD_CLOEXEC) = 0

getpid() = 1825

fcntl(10, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(10, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(10, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

close(38) = 0

fcntl(10, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(10, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(11, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(11, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(12, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(12, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(14, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(14, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(15, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(15, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(13, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(13, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(11, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(12, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(14, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(15, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(13, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(10, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

close(37) = 0

epoll_create(64) = 37

fcntl(37, F_GETFD) = 0

fcntl(37, F_SETFD, FD_CLOEXEC) = 0

getpid() = 1825

epoll_create(64) = 38

fcntl(38, F_GETFD) = 0

fcntl(38, F_SETFD, FD_CLOEXEC) = 0

getpid() = 1825

fcntl(10, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(10, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(10, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

close(38) = 0

fcntl(10, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(10, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(11, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(11, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(12, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(12, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(14, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(14, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(15, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(15, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(13, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0

fcntl(13, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0

fcntl(11, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(12, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(14, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(15, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(13, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

fcntl(10, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0

close(37) = 0
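In case it helps with reading a longer trace, here is a rough sketch (not something I ran on the server) that tallies the `fcntl` lock calls per file descriptor, lock type and return value. The regular expression and the `summarize_locks` name are my own assumptions, written against the line format shown above. In the excerpt above every call returns 0, so the point of the summary would be to spot a call that returns -1 in a longer capture.

```python
import re
from collections import Counter

# Matches fcntl record-lock calls as printed by strace, for example:
# fcntl(10, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0
# The pattern is an assumption based on the lines in this post only.
LOCK_RE = re.compile(
    r"fcntl\((\d+), (F_SETLKW?), \{l_type=(F_\w+), .*?\}\)\s*=\s*(-?\d+)"
)

def summarize_locks(trace_text):
    """Count (fd, command, lock type, return value) tuples in strace output."""
    counts = Counter()
    for line in trace_text.splitlines():
        match = LOCK_RE.search(line)
        if match:
            fd, cmd, lock_type, ret = match.groups()
            counts[(int(fd), cmd, lock_type, int(ret))] += 1
    return counts

# A few lines copied from the trace above; non-lock calls are ignored.
sample = """\
fcntl(10, F_SETLK, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=168, l_len=40000}) = 0
fcntl(10, F_SETLKW, {l_type=F_RDLCK, l_whence=SEEK_SET, l_start=40168, l_len=0}) = 0
fcntl(10, F_SETLKW, {l_type=F_UNLCK, l_whence=SEEK_SET, l_start=168, l_len=0}) = 0
close(38) = 0
"""

for key, n in sorted(summarize_locks(sample).items()):
    print(key, n)
```

To use it on a real capture, the saved strace text would be read from a file and passed to `summarize_locks` instead of `sample`.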

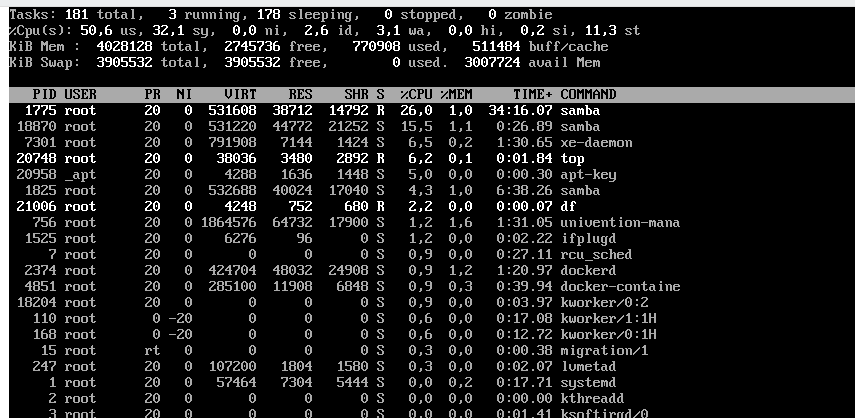

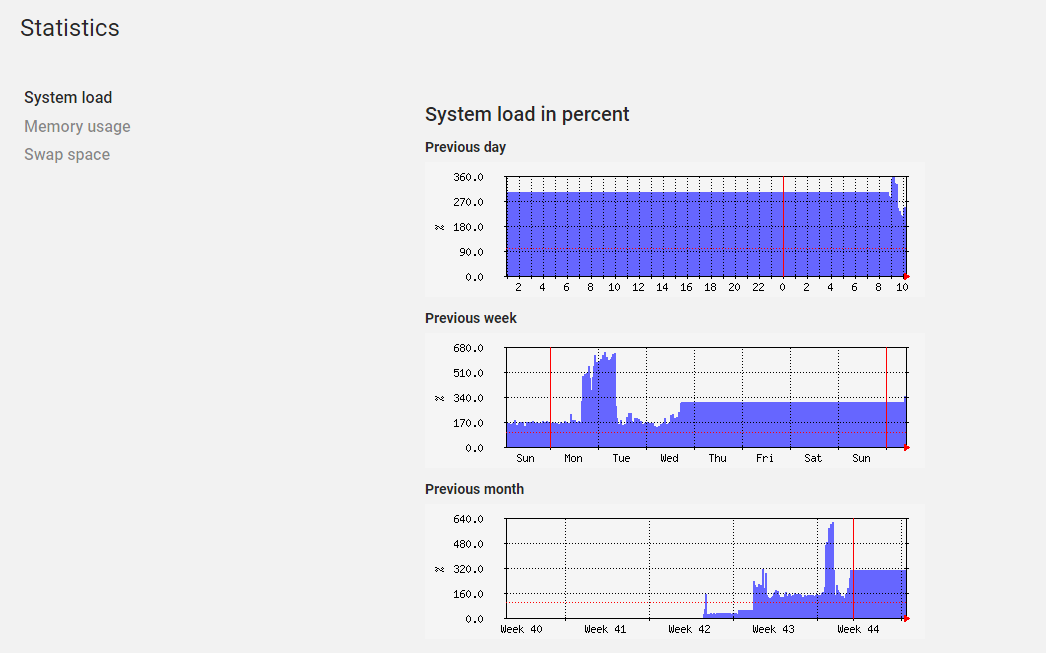

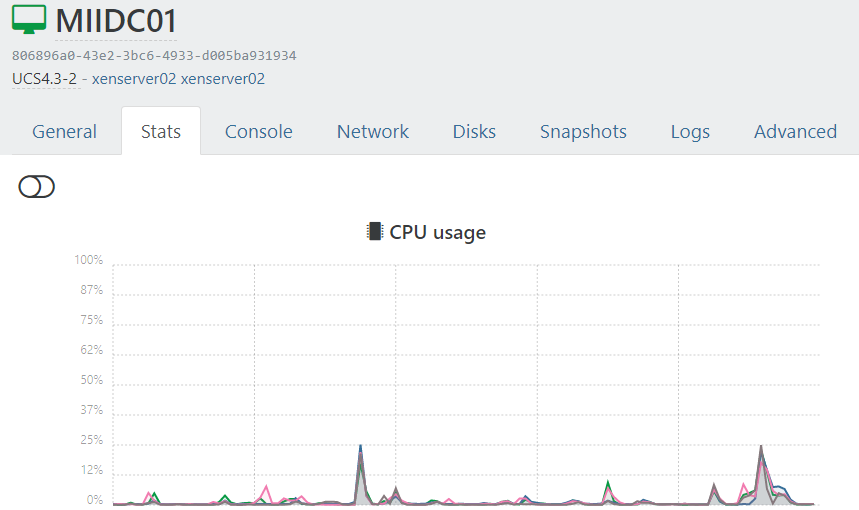

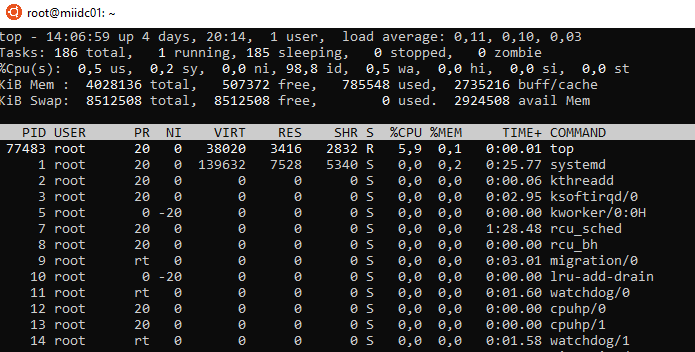

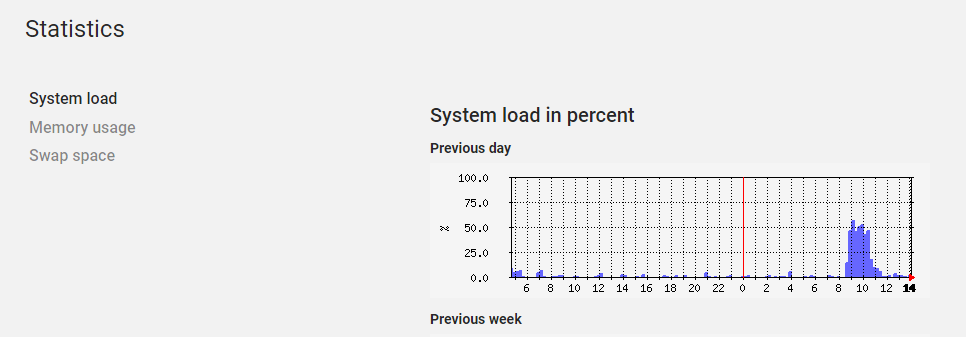

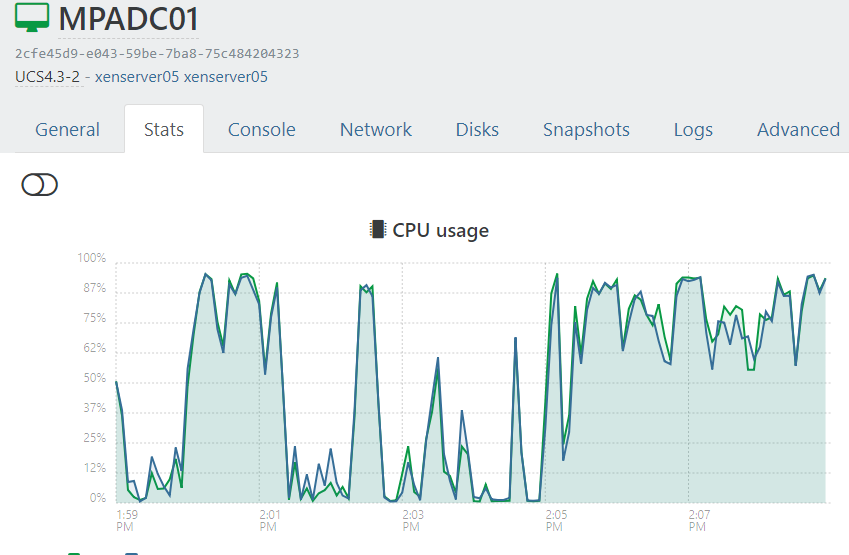

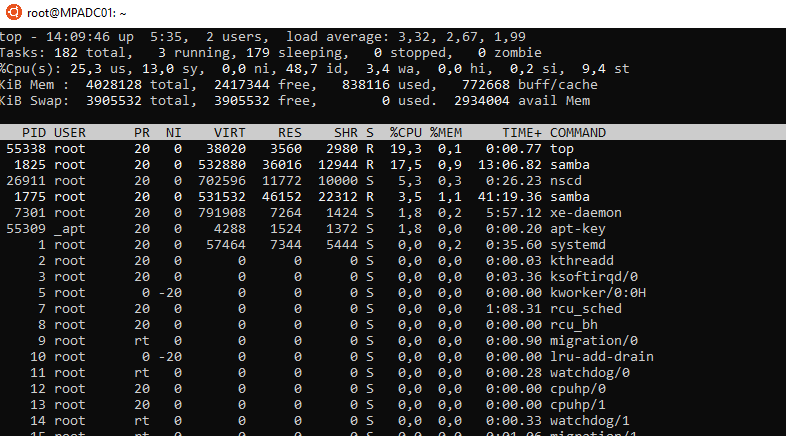

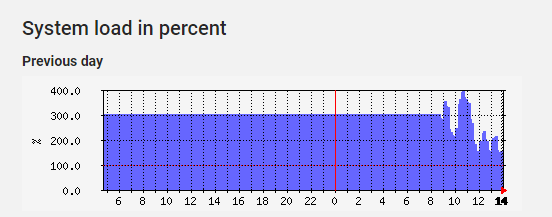

Over the last few days I kept an eye on it before posting and restarted Samba a few times, but the results never improved.

This server has the latest errata installed.